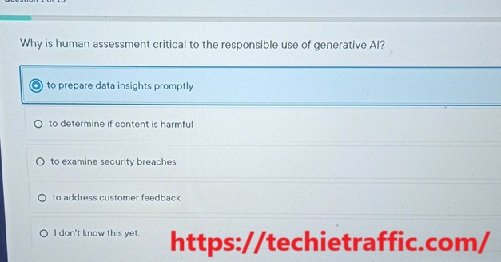

Why is Human Assessment Critical to the Responsible Use of Generative AI?

Generative AI technologies have made significant advancements in recent years, offering incredible potential across industries such as healthcare, entertainment, education, and finance. However, the rapid growth of these technologies brings complex challenges. One of the most pressing concerns is the responsible use of AI, particularly generative AI, which raises the question of why human assessment matters. As AI systems become more autonomous and powerful, the need for human oversight has never been more critical.

In this article, we will explore the crucial role that human assessment plays in ensuring the responsible, ethical, and safe deployment of generative AI. We will look at the challenges associated with AI, the limitations of AI itself, and why human judgment is essential for minimizing risks and maximizing benefits.

Table of Contents

- Introduction to Generative AI

- Understanding Human Assessment in AI

- Challenges with Generative AI

  - Bias and Fairness

  - Accountability and Transparency

  - Ethical Concerns

- Why Human Assessment is Critical

  - Safety and Risk Mitigation

  - Ethical Decision Making

  - Accountability

- Best Practices for Human Assessment in Generative AI

  - Continuous Monitoring

  - Bias Detection and Correction

  - Transparency and Explainability

- The Role of Human Experts in AI Development

- Case Studies of Human Assessment in Generative AI

- Conclusion

- Key Takeaways

1. Introduction to Generative AI

Generative AI refers to artificial intelligence systems capable of creating new content, such as images, text, music, and even video, based on input data. Examples of generative AI models include OpenAI’s GPT series, Google’s Gemini, and image-generating tools like DALL·E. These models work by learning patterns from large datasets and then using those patterns to generate novel outputs.

Generative AI has shown tremendous potential, from creating realistic images and writing coherent articles to generating personalized music and video content. However, the very capabilities that make generative AI so powerful also make it potentially dangerous if not carefully controlled and assessed.

2. Understanding Human Assessment in AI

Human assessment in the context of AI refers to the involvement of human judgment and oversight in the design, training, testing, and deployment of AI systems. While AI systems can automate processes and generate outputs, they still require human input to ensure that their decisions and actions align with ethical standards, legal requirements, and societal norms.

Human assessment involves several aspects:

- Monitoring: Continuously evaluating the performance of AI models to ensure they are functioning as expected.

- Validation: Ensuring that the outputs of AI systems are accurate, fair, and in line with ethical guidelines.

- Intervention: Stepping in when AI systems make decisions that could lead to harm, bias, or unintended consequences.
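The three aspects above can be sketched as a simple review gate that decides which outputs a person needs to look at. This is a minimal illustration under stated assumptions, not a production design: the `risk_score` field, the `route_output` function, and the 0.3 and 0.8 thresholds are all hypothetical names and values chosen for the example.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    RELEASE = "release"            # low risk: publish without review
    HUMAN_REVIEW = "human_review"  # uncertain: a person must validate it
    BLOCK = "block"                # high risk: intervene and withhold it


@dataclass
class GeneratedOutput:
    text: str
    # Hypothetical score in [0, 1], assumed to come from an upstream classifier.
    risk_score: float


def route_output(output: GeneratedOutput,
                 review_threshold: float = 0.3,
                 block_threshold: float = 0.8) -> Decision:
    """Decide whether an output is released, sent to a human, or blocked."""
    if not 0.0 <= output.risk_score <= 1.0:
        raise ValueError("risk_score must be between 0 and 1")
    if output.risk_score >= block_threshold:
        return Decision.BLOCK
    if output.risk_score >= review_threshold:
        return Decision.HUMAN_REVIEW
    return Decision.RELEASE


if __name__ == "__main__":
    samples = [
        GeneratedOutput("A recipe for lentil soup.", 0.05),
        GeneratedOutput("A summary of a disputed news story.", 0.45),
        GeneratedOutput("Text that targets a specific person.", 0.92),
    ]
    for sample in samples:
        print(route_output(sample).value, "-", sample.text)
```

The middle band is the point of the sketch: outputs the automated score cannot settle are the ones routed to human judgment, and the thresholds would need to be tuned by the people responsible for the system.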

3. Challenges with Generative AI

While generative AI offers significant benefits, there are a number of challenges that make its use potentially harmful or irresponsible without proper human oversight.

Bias and Fairness

AI models are only as good as the data they are trained on. If the training data is biased, the AI system will likely produce biased results. For instance, a generative AI system trained on a dataset that lacks diversity may generate content that perpetuates harmful stereotypes or fails to represent certain groups accurately. This can lead to significant issues, especially when generative AI is used in sensitive areas like hiring, law enforcement, or healthcare.

Accountability and Transparency

Generative AI often operates as a “black box,” meaning its decision-making processes are not transparent or easily understood by humans. This lack of transparency makes it difficult to hold AI systems accountable when they make harmful or unethical decisions. Without proper human assessment, it becomes challenging to trace the origins of mistakes or biases in the output.

Ethical Concerns

Generative AI can also create content that is harmful or misleading. For example, AI-generated fake news, deepfakes, and other forms of disinformation can spread quickly on social media and other platforms, causing real-world harm. Without human oversight, these technologies could be used irresponsibly, with damaging consequences for individuals and society.

4. Why Human Assessment is Critical

Human assessment is critical to the responsible use of generative AI for several reasons. Below are some key areas where human involvement is necessary:

Safety and Risk Mitigation

AI models, especially generative ones, can produce unexpected outputs that may pose safety risks. For example, an AI-generated image or video could be used maliciously to deceive others, or an AI-generated text could incite violence or harm. Humans must be involved in assessing the potential risks of AI-generated content and intervene when necessary to prevent harm.

Ethical Decision Making

AI systems lack the ability to make ethical decisions in the way humans do. They operate based on data and algorithms, which may not always align with moral or ethical considerations. Humans need to assess whether the actions and outputs of AI systems align with societal norms and ethical principles, ensuring that AI is used responsibly.

Accountability

Humans must be held accountable for the outcomes of AI systems. If an AI system makes a harmful decision, it is essential to identify who is responsible for that decision—whether it’s the developers who created the AI, the organizations deploying it, or others involved. Human oversight helps establish accountability and ensures that any negative consequences are addressed appropriately.

5. Best Practices for Human Assessment in Generative AI

To ensure the responsible use of generative AI, it is important to implement best practices for human assessment. Here are some strategies that can be adopted:

Continuous Monitoring

AI systems should be monitored continuously to ensure they are performing as expected. This includes checking for any deviations from ethical guidelines, unexpected biases, or unsafe behaviors. Regular audits of AI systems can help detect issues early before they escalate.
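One way to picture continuous monitoring is a rolling window over recent human review results that signals when an audit may be due. The sketch below is only an assumption about how such a check might look: the `FlagRateMonitor` class, the window size, and the 5% alert rate are hypothetical and not taken from any specific tool.

```python
from collections import deque


class FlagRateMonitor:
    """Tracks the share of recent outputs that human reviewers flagged."""

    def __init__(self, window_size: int = 100, alert_rate: float = 0.05):
        if window_size <= 0:
            raise ValueError("window_size must be positive")
        # A deque with maxlen drops the oldest result automatically.
        self.window = deque(maxlen=window_size)
        self.alert_rate = alert_rate

    def record(self, flagged: bool) -> None:
        """Store one review result (True if the reviewer flagged the output)."""
        self.window.append(bool(flagged))

    def flag_rate(self) -> float:
        """Fraction of outputs in the current window that were flagged."""
        if not self.window:
            return 0.0
        return sum(self.window) / len(self.window)

    def needs_audit(self) -> bool:
        """True when the recent flag rate exceeds the alert rate."""
        return self.flag_rate() > self.alert_rate


if __name__ == "__main__":
    monitor = FlagRateMonitor(window_size=10, alert_rate=0.2)
    for flagged in [False, False, True, False, True, True, False, False]:
        monitor.record(flagged)
    print(f"flag rate: {monitor.flag_rate():.2f}, audit: {monitor.needs_audit()}")
```

A rolling window is used here so that an old, clean history cannot mask a recent rise in flagged outputs.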

Bias Detection and Correction

One of the key challenges in AI systems is bias. Human assessors should be involved in detecting and correcting bias in AI models. This involves analyzing the data used to train the model, as well as evaluating the outputs to ensure they are fair and unbiased.

Transparency and Explainability

Generative AI models should be made more transparent. This means providing clear explanations of how they generate outputs and ensuring that humans can understand the reasoning behind their decisions. Explainability helps foster trust and accountability, allowing humans to intervene when necessary.

6. The Role of Human Experts in AI Development

Human experts play a critical role in AI development, from data scientists and engineers to ethicists and legal professionals. These experts are responsible for ensuring that AI systems are designed and implemented in ways that minimize harm and maximize benefits. Their role includes:

- Designing ethical AI frameworks and guidelines

- Reviewing AI models for fairness and accountability

- Assessing the impact of AI on various stakeholders

- Ensuring compliance with legal and regulatory standards

7. Case Studies of Human Assessment in Generative AI

Case Study 1: Deepfakes and Content Moderation

One of the most prominent examples of human assessment in AI is in the fight against deepfakes. Deepfakes, which use AI to create realistic but fabricated images and videos, can cause significant harm. Platforms like YouTube and Facebook have implemented human review processes to flag and remove deepfake content. While AI can help detect deepfakes, human assessors are still needed to evaluate the context and determine whether the content violates platform policies.

Case Study 2: AI in Hiring

AI tools are increasingly being used in hiring processes, such as screening resumes and evaluating candidates. However, these tools can be biased if not properly assessed by humans. Human oversight is necessary to ensure that the AI models used in hiring are fair and transparent and do not discriminate against certain groups.
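As a rough illustration of what a human assessor might compute when auditing a screening tool, the sketch below compares selection rates across groups using the "four-fifths" heuristic: a group whose selection rate falls below 80% of the highest group's rate is flagged for closer review. The function names and the sample data are hypothetical, and a flag here is a prompt for human investigation, not a legal or statistical conclusion.

```python
from collections import Counter


def selection_rates(records):
    """Return the selection rate per group from (group, selected) pairs."""
    totals = Counter()
    selected = Counter()
    for group, was_selected in records:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def adverse_impact_flags(records, threshold=0.8):
    """Flag groups whose rate is below `threshold` times the highest rate."""
    rates = selection_rates(records)
    if not rates:
        return {}
    best = max(rates.values())
    if best == 0:
        # Nobody was selected, so no ratio can be computed.
        return {group: False for group in rates}
    return {group: (rate / best) < threshold for group, rate in rates.items()}


if __name__ == "__main__":
    # Made-up screening results: (group label, passed the AI screen?)
    records = (
        [("A", True)] * 6 + [("A", False)] * 4
        + [("B", True)] * 3 + [("B", False)] * 7
    )
    print(selection_rates(records))
    print(adverse_impact_flags(records))
```

A ratio check like this is deliberately simple; it tells reviewers where to look, and the decision about whether the tool is actually discriminating still rests with people who understand the context.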

8. Conclusion

Generative AI has the potential to revolutionize industries and change the way we interact with technology. However, without responsible oversight and human assessment, the risks associated with these powerful systems could outweigh the benefits. Human involvement is essential in ensuring that AI systems are used ethically, safely, and transparently. By implementing best practices for human assessment, we can mitigate the risks of bias, unethical behavior, and harmful consequences, ensuring that generative AI is a force for good in society.
